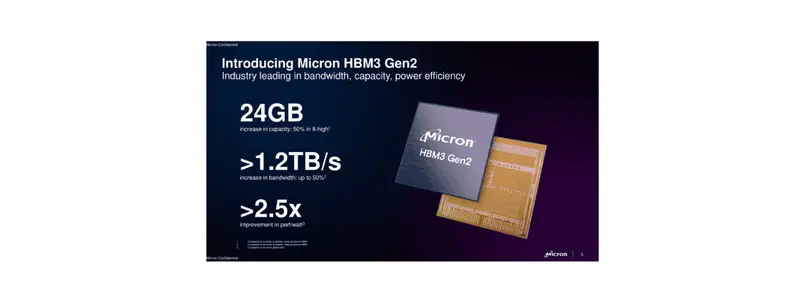

Micron is announcing its newest version of high-bandwidth memory (HBM) for AI accelerators and high-performance computing (HPC). The company had previously offered HBM2 modules, but its newest memory technology is so advanced it’s skipping right over first-generation HBM3 and calling it HBM3 Gen2.

Micron has announced that it’s currently sampling its new 24GB HBM3 Gen2 memory to customers, with the new high-speed memory featuring 1.2 TB/s of memory bandwidth. Micron notes that HBM3 Gen2 delivers 2.5 times greater performance per watt than previous generations, making it suited to AI data centres where performance, capacity, and efficiency all matter. According to Micron, these improvements reduce training times for large language models like GPT-4 and beyond, allow more efficient infrastructure use for AI inference, and lower total cost of ownership (TCO).

The 8-high, 24GB HBM3 Gen2 stack raises pin speed to over 9.2Gb/s, which Micron describes as up to a 50% improvement over existing HBM3 solutions. Over a 1024-bit bus, that works out to roughly 1.2 TB/s of bandwidth per stack (9.2 Gb/s × 1024 bits ÷ 8 bits per byte ≈ 1,178 GB/s), a massive improvement over the current 820 GB/s found in existing HBM3.
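
For readers who want to check the numbers, here is a minimal Python sketch of the bandwidth arithmetic. It assumes peak bandwidth is simply pin speed multiplied by bus width, converted from bits to bytes, and it assumes a 6.4 Gb/s pin speed for existing HBM3, a figure not stated above but one that reproduces the quoted 820 GB/s. The function name `peak_bandwidth_gb_per_s` is made up for this illustration.

```python
def peak_bandwidth_gb_per_s(pin_speed_gbit_per_s: float, bus_width_bits: int = 1024) -> float:
    """Peak per-stack bandwidth in GB/s (decimal units).

    Each pin moves `pin_speed_gbit_per_s` gigabits per second, and the bus
    has `bus_width_bits` pins; dividing by 8 converts bits to bytes.
    """
    return pin_speed_gbit_per_s * bus_width_bits / 8


# Pin speed quoted for HBM3 Gen2 in the article.
hbm3_gen2 = peak_bandwidth_gb_per_s(9.2)

# Assumption: 6.4 Gb/s per pin for existing HBM3 (matches the ~820 GB/s figure).
hbm3 = peak_bandwidth_gb_per_s(6.4)

# Relative uplift implied by those two pin speeds.
uplift_percent = (hbm3_gen2 / hbm3 - 1) * 100

print(f"HBM3 Gen2: {hbm3_gen2:.1f} GB/s")
print(f"HBM3:      {hbm3:.1f} GB/s")
print(f"Uplift:    {uplift_percent:.1f}%")
```

With these inputs the Gen2 figure lands just under 1.2 TB/s, which is consistent with the pin speed being quoted as "over" 9.2Gb/s. The uplift computed from exactly 9.2 and 6.4 Gb/s comes out somewhat below 50%, so the headline percentage is best read as an upper bound.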