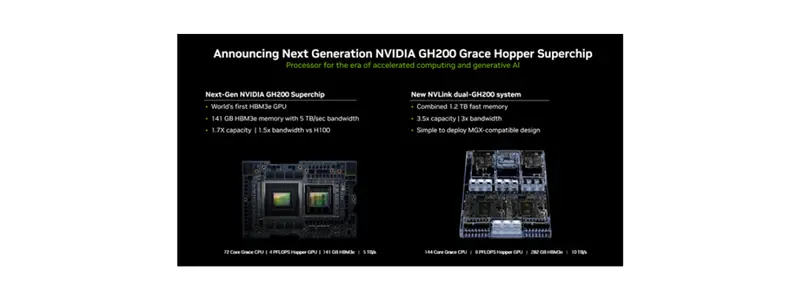

NVIDIA recently announced the next-generation NVIDIA GH200 Grace Hopper platform. The platform is built around a new Grace Hopper Superchip featuring the world’s first HBM3e processor. The announcement comes on top of the previously announced GH200 with HBM3, which is now in production and scheduled to reach the market later this year. This suggests that NVIDIA will release two versions of the same device, with the HBM3 model arriving first and the HBM3e model following later.

The new GH200 Grace Hopper Superchip combines a 72-core Grace CPU with 480 GB of ECC LPDDR5X memory and a GH100 compute GPU with 141 GB of HBM3e memory in six 24 GB stacks on a 6,144-bit memory interface. Although NVIDIA physically installs 144 GB of memory, only 141 GB is accessible, which improves yields.
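The HBM figures above can be cross-checked with a little arithmetic. The sketch below uses only the numbers quoted in the article. The variable names are illustrative, and the even split of the memory interface across the six stacks is an assumption rather than a stated specification.

```python
# Figures quoted in the article for the HBM3e-equipped GH200.
HBM_STACKS = 6
GB_PER_STACK = 24
USABLE_HBM_GB = 141
INTERFACE_BITS = 6144

# Physically installed capacity across all stacks.
installed_gb = HBM_STACKS * GB_PER_STACK

# Capacity that is installed but not exposed to software.
reserved_gb = installed_gb - USABLE_HBM_GB

# Assumption: the interface is divided evenly among the stacks.
bits_per_stack = INTERFACE_BITS // HBM_STACKS

print(f"installed: {installed_gb} GB, reserved: {reserved_gb} GB")
print(f"interface width per stack: {bits_per_stack} bits")
```

Six 24 GB stacks give the 144 GB of installed memory, which leaves 3 GB held back relative to the 141 GB that is exposed.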

The new platform uses the Grace Hopper Superchip, which can be connected to additional Superchips through NVIDIA NVLink™, allowing them to work together to deploy the giant models used for generative AI. This high-speed, coherent technology gives the GPU full access to the CPU memory, providing a combined 1.2 TB of fast memory in the dual configuration. HBM3e memory, which is 50% faster than current HBM3, delivers a total of 10 TB/s of combined bandwidth, allowing the new platform to run models 3.5x larger than the previous version while improving performance with 3x higher memory bandwidth. The new dual GH200 board takes this advantage to the next level, combining two Grace Hopper Superchips connected by NVLink on a single board, including the fast LPDDR5X memory, which costs less and consumes far less energy than an x86 server. The platform can also scale to 256 GPUs over NVLink.
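As a rough check on the 1.2 TB figure, the sketch below adds the CPU and GPU memory of each Superchip and doubles it for the dual configuration. It assumes that "fast memory" counts both the 480 GB of LPDDR5X and the 141 GB of HBM3e on each Superchip, and that the total is quoted in decimal terabytes. The names are illustrative.

```python
# Per-Superchip memory figures quoted in the article.
CPU_MEMORY_GB = 480   # LPDDR5X attached to the Grace CPU
GPU_MEMORY_GB = 141   # usable HBM3e attached to the GPU
SUPERCHIPS = 2        # dual configuration

# Memory visible within one Superchip (assumption: CPU plus GPU memory).
per_superchip_gb = CPU_MEMORY_GB + GPU_MEMORY_GB

# Combined memory across both Superchips, in GB and decimal TB.
combined_gb = SUPERCHIPS * per_superchip_gb
combined_tb = combined_gb / 1000

print(f"per Superchip: {per_superchip_gb} GB")
print(f"dual configuration: {combined_gb} GB (about {combined_tb:.1f} TB)")
```

Under these assumptions the dual configuration comes to 1,242 GB, which matches the quoted 1.2 TB after rounding.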